aks-terraform

Azure Kubernetes Service with Terraform :new: :wheel_of_dharma: :sailboat: :cloud:

This repository is actively maintained @ https://github.com/dwaiba/aks-terraform

Table of Contents (Azure Kubernetes Service with Terraform)

- Create ServicePrincipal and Subscription ID

- Install terraform locally

- Automatic provisioning

- License

- Terraform graph

- Code of conduct

- Todo

- Manual stepped provisioning

- Reporting bugs

- Patches and pull requests

Have fun watching a 4x-speed recording of AKS creation via asciinema: a 3-node cluster with the required Jenkins plugins, Tiller, ingress controllers, Brigade and prometheus-grafana takes around 20 minutes to create on Azure.

Create ServicePrincipal and Subscription ID

docker run -ti docker4x/create-sp-azure aksadmin

Your access credentials ==================================================

AD ServicePrincipal App ID: xxxxxx

AD ServicePrincipal App Secret: xxxxxx

AD ServicePrincipal Tenant ID: xxxxxx

Install terraform locally

wget https://releases.hashicorp.com/terraform/0.11.8/terraform_0.11.8_linux_amd64.zip -O temp.zip; unzip temp.zip; rm temp.zip ;sudo cp terraform /usr/local/bin
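The one-liner above hardcodes the version (0.11.8) in two places. As a minimal sketch, assuming HashiCorp keeps the release URL layout used above, a small helper (the name `terraform_zip_url` is hypothetical) builds the URL from a single version string:

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the HashiCorp release URL for a Terraform version.
# Assumes the layout used above:
#   https://releases.hashicorp.com/terraform/<version>/terraform_<version>_<platform>.zip
terraform_zip_url() {
  local version="$1"                 # e.g. 0.11.8
  local platform="${2:-linux_amd64}" # defaults to the platform used above
  printf 'https://releases.hashicorp.com/terraform/%s/terraform_%s_%s.zip\n' \
    "$version" "$version" "$platform"
}

# Usage (same steps as the one-liner above):
#   wget "$(terraform_zip_url 0.11.8)" -O temp.zip && unzip temp.zip && rm temp.zip && sudo cp terraform /usr/local/bin
```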

Automatic provisioning

All in one with docker azure-cli-python

Please note: Docker and the terraform binary must be installed, and your id_rsa.pub must be present in the current directory before running the following.

The locally installed Terraform binary should live in the non-root path:

/usr/local/bin

Docker is used during deployment only to run the azure-cli-python container, so the Azure CLI does not need to be installed locally.

Create a new cluster:

wget https://raw.githubusercontent.com/dwaiba/aks-terraform/master/create_cluster.sh && chmod +x create_cluster.sh && ./create_cluster.sh

Terraform will now prompt for the 13 variables as below in sequence:

- agent_count

- azurek8s_sku

- azure_container_registry_name

- client_id

- client_secret

- cluster_name

- dns_prefix

- helm_install_jenkins

- install_suitecrm

- kube_version

- location

- patch_svc_lbr_external_ip

- resource_group_name
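Terraform also reads any input variable from an environment variable named `TF_VAR_<name>`, which suppresses the interactive prompt for it. A minimal sketch, assuming you run `terraform` directly from the same shell (not verified for create_cluster.sh, which may invoke it differently); the helper name `export_aks_vars` is hypothetical and every value except the two arguments is a placeholder taken from the manual provisioning example:

```shell
#!/usr/bin/env bash
# Hypothetical helper: export the 13 variables as TF_VAR_<name> so that
# terraform, run from this shell, does not prompt for them.
# All values except client_id/client_secret are placeholders; adjust them.
export_aks_vars() {
  local client_id="$1" client_secret="$2"
  export TF_VAR_agent_count=3
  export TF_VAR_azure_container_registry_name=hclaks
  export TF_VAR_azurek8s_sku=Standard_F4s_v2
  export TF_VAR_client_id="$client_id"
  export TF_VAR_client_secret="$client_secret"
  export TF_VAR_cluster_name=hclaksclus
  export TF_VAR_dns_prefix=hclaks
  export TF_VAR_helm_install_jenkins=false
  export TF_VAR_install_suitecrm=false
  export TF_VAR_kube_version=1.11.4
  export TF_VAR_location=westeurope
  export TF_VAR_patch_svc_lbr_external_ip=true
  export TF_VAR_resource_group_name=hclaks
}

# Usage:
#   export_aks_vars '<<your app client id>>' '<<your_app_secret>>' && terraform plan -out run.plan
```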

Expected values and conventions for the 13 variables are as follows:

var.agent_count

Number of Cluster Agent Nodes (GPU quota defaults to only 2 Standard_NC6 per subscription). Please view https://docs.microsoft.com/en-us/azure/aks/faq#are-security-updates-applied-to-aks-agent-nodes

Enter a value: <<agent_count is the number of "agents": 2 for GPU (or more if you have quota), otherwise 3, 5 or 7>>

var.azure_container_registry_name

Please input the ACR name to create in the same Resource Group

Enter a value: <<azure_container_registry_name, alphanumeric only, as "<<org>>aks<<yournameorBU>>">>

var.azurek8s_sku

SKU of the cluster nodes. Recommended: Standard_F4s_v2 for normal and Standard_NC6 for GPU (GPU quota defaults to only 2 per subscription). Please view Azure Linux VM Sizes at https://docs.microsoft.com/en-us/azure/virtual-machines/linux/sizes

Enter a value: Standard_F4s_v2

var.client_id

Please input the Azure Application ID known as client_id

Enter a value: <<client_id, which is the SP client ID>>

var.client_secret

Please input the Azure client secret for the Azure Application ID known as client_id

Enter a value: <<client_secret, which is the secret for the above as created in the pre-req>>

var.cluster_name

Please input the k8s cluster name to create

Enter a value: <<cluster_name as "<<org>>aks<<yournameorBU>>">>

var.dns_prefix

Please input the DNS prefix to create

Enter a value: <<dns_prefix as "<<org>>aks<<yournameorBU>>">>

var.helm_install_jenkins

Please input whether to install Jenkins by default: either true or false

Enter a value: <<true/false>>

var.install_suitecrm

Install SuiteCRM with MariaDB: true or false

Enter a value: <<true/false>>

var.kube_version

Please input the k8s version: 1.14.8 or older ones like 1.10.6, 1.11.1, 1.11.2, 1.11.3 or 1.11.4

Enter a value: 1.14.8

var.location

Please input the Azure region for deployment, e.g. westeurope or eastus

Enter a value: eastus

var.patch_svc_lbr_external_ip

Please input whether to patch the grafana and kubernetes-dashboard services via the LBR Ingress External IP: either true or false

Enter a value: <<true/false>>

var.resource_group_name

Please input a new Azure Resource group name

Enter a value: <<Azure Resource group for the AKS service as "<<org>>aks<<yournameorBU>>">>

At the time of writing, the latest kube_version for AKS is 1.14.8; available versions vary by region, with older ones ranging from 1.9.x to 1.11.4. Please view Azure service availability for AKS in your region, and also check via:

az aks get-versions --location <<locationname>>
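The output format of `az aks get-versions` differs between az CLI releases, so the sketch below only assumes you have extracted the version strings, one per line. It picks the highest one with GNU `sort -V` (version sort); a plain `sort` would wrongly rank 1.9.x above 1.10.x. The helper name `latest_version` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the highest X.Y.Z version read from stdin
# (one per line). Non-version lines are ignored. Requires GNU sort -V.
latest_version() {
  grep -E '^[0-9]+\.[0-9]+\.[0-9]+$' | sort -V | tail -n 1
}

# Usage:
#   printf '%s\n' 1.10.6 1.11.4 1.14.8 | latest_version
```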

The DNSPrefix must contain between 3 and 45 characters and can contain only letters, numbers, and hyphens. It must start with a letter and must end with a letter or a number.
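A failed `apply` on an invalid name wastes a long run, so the DNS prefix rule can be checked up front. A minimal sketch in bash (the function name `valid_dns_prefix` is hypothetical) that encodes exactly the rule above: 3-45 characters, letters, digits and hyphens only, starting with a letter and ending with a letter or digit:

```shell
#!/usr/bin/env bash
# Hypothetical validator for dns_prefix. Returns 0 if valid, 1 otherwise.
# Pattern: 1 leading letter + 1..43 middle chars + 1 trailing letter/digit = 3..45 chars.
valid_dns_prefix() {
  [[ "$1" =~ ^[A-Za-z][A-Za-z0-9-]{1,43}[A-Za-z0-9]$ ]]
}

# Usage:
#   valid_dns_prefix hclaks && echo "ok"
```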

Only alphanumeric characters are allowed in azure_container_registry_name.
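The registry name rule can be checked the same way. Besides being alphanumeric, Azure's naming rules (at the time of writing) limit registry names to 5-50 characters; that length bound is taken from Azure's documentation, not from this repo. The function name `valid_acr_name` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical validator for azure_container_registry_name:
# alphanumeric only, 5-50 characters (length bound per Azure naming rules).
valid_acr_name() {
  [[ "$1" =~ ^[A-Za-z0-9]{5,50}$ ]]
}

# Usage:
#   valid_acr_name hclaksgpu && echo "ok"
```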

The storage account_tier must be either Standard or Premium, with replication up to GRS (RAGRS is not supported).

storage_account_id can only be specified for the Classic (unmanaged) SKU of Azure Container Registry, which does not support webhooks. The default is the Premium SKU of Azure Container Registry.

KUBECONFIG

echo "$(terraform output kube_config)" > ~/.kube/azurek8s

Alternatively, echo the output and copy its content into your local kubectl config.

export KUBECONFIG=~/.kube/azurek8s
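The kubeconfig contains cluster credentials, so it is worth creating it readable only by you. A minimal sketch (the helper name `save_kubeconfig` is hypothetical) that reads the config from stdin, creates the parent directory if needed and leaves the file with mode 600. Note that on Terraform 0.14 and later, `terraform output` quotes string values unless `-raw` is passed:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write kubeconfig content from stdin to the given path,
# creating the parent directory and restricting the file to the current user.
save_kubeconfig() {
  local target="$1"
  mkdir -p "$(dirname "$target")"
  # Restrictive umask in a subshell so a new file is created as 0600;
  # chmod afterwards also fixes the mode of a pre-existing file.
  ( umask 077 && cat > "$target" ) && chmod 600 "$target"
}

# Usage (Terraform 0.11 as used here; add -raw on 0.14+):
#   terraform output kube_config | save_kubeconfig ~/.kube/azurek8s
#   export KUBECONFIG=~/.kube/azurek8s
```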

Sanity

kubectl get nodes

kubectl proxy

Dashboard available at http://localhost:8001/api/v1/namespaces/kube-system/services/kubernetes-dashboard/proxy/#!/overview?namespace=default.

Or, if proxying from a server, make it reachable from other hosts as follows (quote the regular expression so the shell does not glob-expand it):

kubectl proxy --address 0.0.0.0 --accept-hosts '.*' &

Jenkins Master

After cluster creation, run “kubectl get svc” to get the URL for Jenkins, and obtain the Jenkins admin password as follows, preferably from within the azure-cli-python container bash prompt:

kubectl get secret --namespace default hclaks-jenkins -o jsonpath="{.data.jenkins-admin-password}" | base64 -d; echo

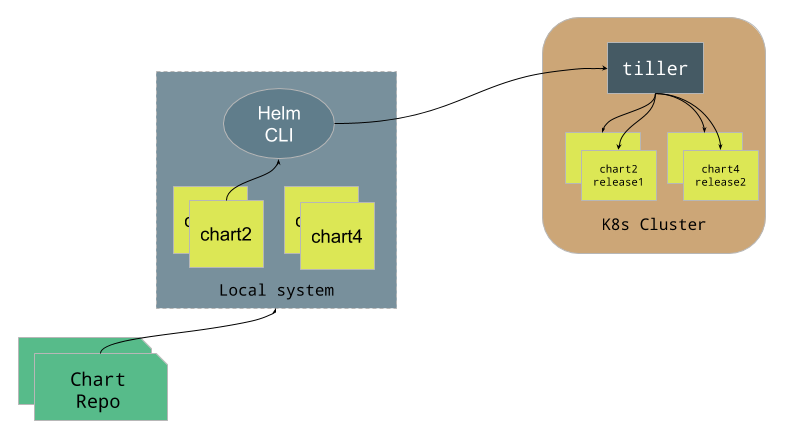

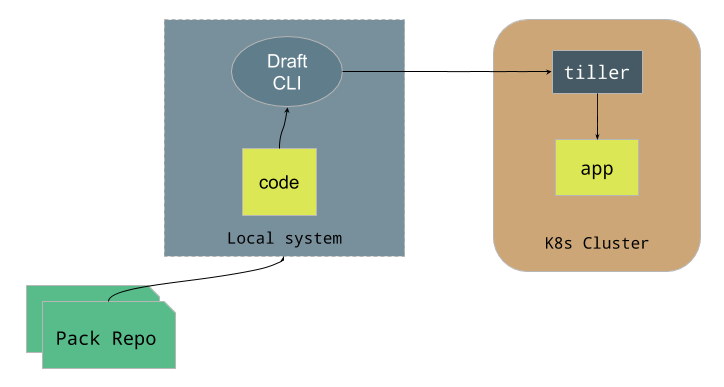

Tiller Server with Draft and Brigade Server

Both are auto-provisioned.

One can also use Draft with the Container Registry, and use Helm to install any chart.

kube-prometheus-grafana

Provisioned by the local-exec provisioner in main.tf on master via git clone https://github.com/coreos/prometheus-operator.git, without RBAC (global.rbacEnable=false) and without prometheus-operator.

The Grafana dashboard is available after port forwarding:

kubectl get pods --namespace monitoring

kubectl get pods kube-prometheus-grafana-6f8554f575-bln7x --template='{{(index (index .spec.containers 0).ports 0).containerPort}}{{"\n"}}' --namespace monitoring

kubectl port-forward kube-prometheus-grafana-6f8554f575-bln7x 3000:3000 --namespace monitoring &
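The pod name above (kube-prometheus-grafana-6f8554f575-bln7x) differs for every deployment. A minimal sketch that picks the first pod whose name starts with a given prefix from `kubectl get pods -o name` output, so the name need not be copied by hand. The helper name `first_pod_with_prefix` is hypothetical; it strips both the `pod/` prefix and the plural `pods/` form printed by some older kubectl versions:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read pod names on stdin and print the first one that
# starts with the given prefix (any leading "pod/" or "pods/" is removed).
first_pod_with_prefix() {
  sed -E 's#^pods?/##' | awk -v p="$1" 'index($0, p) == 1 { print; exit }'
}

# Usage:
#   POD=$(kubectl get pods --namespace monitoring -o name | first_pod_with_prefix kube-prometheus-grafana)
#   kubectl port-forward "$POD" 3000:3000 --namespace monitoring &
```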

Grafana user/password (generally admin/admin):

kubectl get secret --namespace monitoring kube-prometheus-grafana -o jsonpath="{.data.password}" | base64 -d ; echo

kubectl get secret --namespace monitoring kube-prometheus-grafana -o jsonpath="{.data.user}" | base64 -d ; echo

Tesla K80 (GK210) sanity check for NC-series nodes via the k8s NVIDIA device plugin in the cluster

kubectl get nodes|awk '{print $1}'|sed 1d|xargs kubectl describe node|grep nvidia

The output should include lines such as:

Labels: accelerator=nvidia

kube-system nvidia-device-plugin-bj4hx 0 (0%) 0 (0%) 0 (0%) 0 (0%)
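To count the GPUs Kubernetes can actually schedule, rather than grepping the describe output, one can read each node's allocatable `nvidia.com/gpu` resource (the resource name the NVIDIA device plugin advertises) with a JSONPath query and sum it. A sketch; the summing helper name `sum_gpus` is hypothetical. Each Standard_NC6 node exposes one GPU, so a 2-node GPU cluster should report 2:

```shell
#!/usr/bin/env bash
# Hypothetical helper: sum whitespace-separated integers on stdin.
# Prints 0 for empty input (e.g. when no node advertises nvidia.com/gpu).
sum_gpus() {
  awk '{ for (i = 1; i <= NF; i++) s += $i } END { print s + 0 }'
}

# Usage (the dot in the resource name is escaped for kubectl's JSONPath):
#   kubectl get nodes -o jsonpath='{.items[*].status.allocatable.nvidia\.com/gpu}' | sum_gpus
```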

For benchmarking with multiple GPUs (the job requires a minimum of 8 GPUs by default, with 1 replica), clone the following repo and change the number of GPUs required before running the benchmarking MPI job: https://github.com/kubeflow/mpi-operator

License

- Please see the LICENSE file for licensing information.

Code of Conduct

- Please see the Code of Conduct

Terraform Graph

Please generate a DOT-format (Graphviz) graph of the Terraform configuration for a visual representation of the repo:

terraform graph | dot -Tsvg > graph.svg

Also, one can use Blast Radius on live initialized terraform project to view graph.

To run it dockerized (xargs -r skips docker kill when no Blast Radius container is running, so the rest of the chain still executes):

docker ps|grep blast-radius|awk '{print $1}'|xargs -r docker kill; rm -rf aks-terraform && git clone https://github.com/dwaiba/aks-terraform && cd aks-terraform && terraform init && docker run --cap-add=SYS_ADMIN -dit --rm -p 5000:5000 -v "$(pwd)":/workdir:ro 28mm/blast-radius && cd ../

A live example is here for this project. Blast Radius can also be installed locally with pip3.

Todo

- RBAC

- Service Mesh

- Kashti

Manual stepped provisioning

Running with sudo is recommended, so that the local tool installs performed alongside Terraform work.

AKS Cluster

https://github.com/dwaiba/aks-terraform

Pre-req:

- docker run -ti docker4x/create-sp-azure aksadmin generates the client id and client secret after you authenticate via the container at https://aka.ms/devicelogin.

- id_rsa.pub should be present in the aks-terraform folder.

- You must be root, or run with sudo as a user with sudo privileges.

Plan:

git clone https://github.com/dwaiba/aks-terraform && cd aks-terraform

sudo su

sudo az login && sudo terraform init && sudo terraform plan -var agent_count=3 -var azure_container_registry_name=hclaks -var azurek8s_sku=Standard_F4s_v2 -var client_id=<<your app client id>> -var client_secret=<<your_app_secret>> -var cluster_name=hclaksclus -var dns_prefix=hclaks -var helm_install_jenkins=false -var install_suitecrm=false -var kube_version=1.11.4 -var location=westeurope -var patch_svc_lbr_external_ip=true -var resource_group_name=hclaks -out "run.plan"

Apply:

sudo terraform apply "run.plan"

Destroy:

sudo terraform destroy -var agent_count=3 -var azure_container_registry_name=hclaks -var azurek8s_sku=Standard_F4s_v2 -var client_id=<<your app client id>> -var client_secret=<<your_app_secret>> -var cluster_name=hclaksclus -var dns_prefix=hclaks -var helm_install_jenkins=false -var install_suitecrm=false -var kube_version=1.11.4 -var location=westeurope -var patch_svc_lbr_external_ip=true -var resource_group_name=hclaks
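The plan and destroy commands above repeat the same 13 `-var` flags. Terraform automatically loads a file named `terraform.tfvars` from the working directory, so writing it once lets `terraform plan`, `apply` and `destroy` run without any `-var` flags. A minimal sketch; the helper name `write_tfvars` is hypothetical, the values other than the two arguments are the placeholders from the example above, and it assumes the secret contains no double quotes. Keep the file out of version control, since it holds the client secret:

```shell
#!/usr/bin/env bash
# Hypothetical helper: write terraform.tfvars (or the path given as $3).
# Values other than client_id/client_secret are placeholders; adjust them.
write_tfvars() {
  local client_id="$1" client_secret="$2" out="${3:-terraform.tfvars}"
  # Restrictive umask in a subshell: the file holds the service principal secret.
  (
    umask 077
    cat > "$out" <<EOF
agent_count = "3"
azure_container_registry_name = "hclaks"
azurek8s_sku = "Standard_F4s_v2"
client_id = "${client_id}"
client_secret = "${client_secret}"
cluster_name = "hclaksclus"
dns_prefix = "hclaks"
helm_install_jenkins = "false"
install_suitecrm = "false"
kube_version = "1.11.4"
location = "westeurope"
patch_svc_lbr_external_ip = "true"
resource_group_name = "hclaks"
EOF
  )
}

# Usage:
#   write_tfvars '<<your app client id>>' '<<your_app_secret>>'
#   sudo terraform plan -out "run.plan" && sudo terraform apply "run.plan"
#   sudo terraform destroy
```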

AKS GPU Cluster

Running with sudo is recommended, so that the local tool installs performed alongside Terraform work.

https://github.com/dwaiba/aks-terraform - “GPU Compute” k8s for AKS - 2 Tesla K80s available for cluster and seen by k8s

Pre-req:

- docker run -ti docker4x/create-sp-azure aksadmin generates the client id and client secret after you authenticate via the container at https://aka.ms/devicelogin.

- id_rsa.pub should be present in the aks-terraform folder.

Plan:

git clone https://github.com/dwaiba/aks-terraform && cd aks-terraform

sudo ls -alrt

sudo az login && sudo terraform init && sudo terraform plan -var agent_count=2 -var azure_container_registry_name=hclaksgpu -var azurek8s_sku=Standard_NC6 -var client_id=<<your app client id>> -var client_secret=<<your_app_secret>> -var cluster_name=hclaksclusgpu -var dns_prefix=hclaksgpu -var helm_install_jenkins=false -var install_suitecrm=false -var kube_version=1.11.4 -var location=westeurope -var patch_svc_lbr_external_ip=true -var resource_group_name=hclaksgpu -out "run.plan"

Apply:

sudo terraform apply "run.plan"

Destroy:

sudo terraform destroy -var agent_count=2 -var azure_container_registry_name=hclaksgpu -var azurek8s_sku=Standard_NC6 -var client_id=<<your app client id>> -var client_secret=<<your_app_secret>> -var cluster_name=hclaksclusgpu -var dns_prefix=hclaksgpu -var helm_install_jenkins=false -var install_suitecrm=false -var kube_version=1.11.4 -var location=westeurope -var patch_svc_lbr_external_ip=true -var resource_group_name=hclaksgpu

Run the Azure CLI container and copy the terraform binary along with id_rsa.pub into it

docker run -dti --name=azure-cli-python --restart=always azuresdk/azure-cli-python && docker cp terraform azure-cli-python:/ && docker cp id_rsa.pub azure-cli-python:/ && docker exec -ti azure-cli-python bash -c "az login && bash"

Clone this repo in the azure-cli-python container

git clone https://github.com/dwaiba/aks-terraform

Install kubectl in the azure-cli-python container as follows (optionally, you can also install kubectl locally):

curl -LO https://storage.googleapis.com/kubernetes-release/release/$(curl -s https://storage.googleapis.com/kubernetes-release/release/stable.txt)/bin/linux/amd64/kubectl;

chmod +x ./kubectl;

mv ./kubectl /usr/local/bin/kubectl;

mv ../id_rsa.pub /aks-terraform;

Fill in the variables file with default values

Terraform for aks

mv ~/terraform aks-terraform/

cd aks-terraform

terraform init

terraform plan -out run.plan

terraform apply "run.plan"

Reporting bugs

Please report bugs by opening an issue in the GitHub Issue Tracker. Bug reports have an auto template defined; please view it here.

Patches and pull requests

Patches can be submitted as GitHub pull requests. If using GitHub please make sure your branch applies to the current master as a ‘fast forward’ merge (i.e. without creating a merge commit). Use the git rebase command to update your branch to the current master if necessary.

Contributors

:sparkles: Recognize all contributors, not just the ones who push code :sparkles:

Thanks go to these wonderful people:

- anishnagaraj

- Ranjith

- cvakumark

- Dwai Banerjee

This project follows the all-contributors specification. Contributions of any kind welcome!
