aws-terraform

EKS

Table of Contents (EKS and/or AWS RHEL 7.7/CentOS 7.7 with a disk farm, using Terraform in any region)

- EKS and/or AWS bastion user-data with Terraform - RHEL 7.7 and CentOS 7.7 in all regions with disk and with tools

- Login

- Automatic provisioning

- Create a HA k8s Cluster as IAAS

- Reporting bugs

- Patches and pull requests

- License

- Code of conduct

EKS TL;DR

:beginner: Plan:

terraform init && terraform plan -var aws_access_key=<<ACCESS KEY>> -var aws_secret_key=<<SECRET KEY>> -var count_vms=0 -var disk_sizegb=30 -var distro=centos7 -var key_name=testdwai -var elbcertpath=~/Downloads/testdwaicert.pem -var private_key_path=~/Downloads/testdwai.pem -var region=us-east-1 -var tag_prefix=k8snodes -out "run.plan"

:beginner: Apply:

terraform apply "run.plan"

:beginner: stackDeploy with aws ingress controller, EFK, prometheus-operator, consul-server/ui:

export KUBECONFIG=~/aws-terraform/kubeconfig_test-eks && ./deploystack.sh && cd helm && terraform init && terraform plan -out helm.plan && terraform apply helm.plan && kubectl apply -f kubernetes-manifests.yaml && kubectl apply -f all-in-one.yaml

:beginner: Destroy stack:

export KUBECONFIG=~/aws-terraform/kubeconfig_test-eks && kubectl delete -f kubernetes-manifests.yaml && kubectl delete -f all-in-one.yaml && terraform destroy --auto-approve

:beginner: Destroy cluster and other aws resources:

terraform destroy -var aws_access_key=<<ACCESS KEY>> -var aws_secret_key=<<SECRET KEY>> -var count_vms=0 -var disk_sizegb=30 -var distro=centos7 -var key_name=testdwai -var elbcertpath=~/Downloads/testdwaicert.pem -var private_key_path=~/Downloads/testdwai.pem -var region=us-east-1 -var tag_prefix=k8snodes --auto-approve

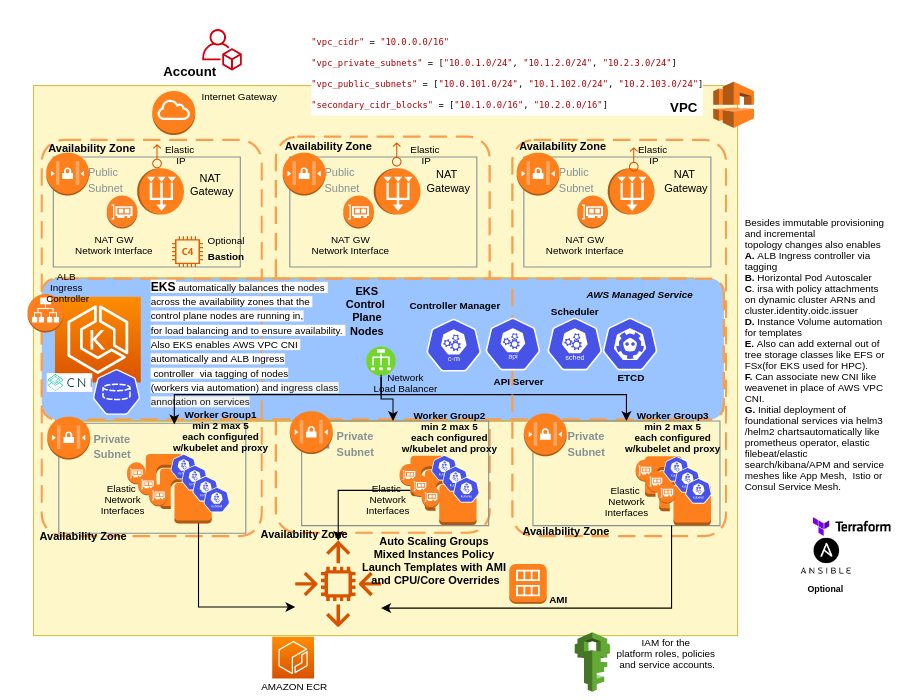

Topology

Modules and Providers

modules

cloudposse/ecr/aws 0.19.0 for ecr

- ecr in .terraform/modules/ecr/terraform-aws-ecr-0.19.0

git::github.com/cloudposse/terraform-null-label.git?ref=tags/0.16.0 for ecr.label

- ecr.label in .terraform/modules/ecr.label

terraform-aws-modules/eks/aws 12.1.0 for eks-cluster

- eks-cluster in .terraform/modules/eks-cluster/terraform-aws-eks-12.1.0

- eks-cluster.node_groups in .terraform/modules/eks-cluster/terraform-aws-eks-12.1.0/modules/node_groups

Instance templates are being used from .terraform/modules/eks-cluster/terraform-aws-eks-12.1.0

terraform-aws-modules/iam/aws 2.12.0 for iam_assumable_role_admin

- iam_assumable_role_admin in .terraform/modules/iam_assumable_role_admin/terraform-aws-iam-2.12.0/modules/iam-assumable-role-with-oidc

terraform-aws-modules/iam/aws 2.12.0 for iam_assumable_role_with_oidc

- iam_assumable_role_with_oidc in .terraform/modules/iam_assumable_role_with_oidc/terraform-aws-iam-2.12.0/modules/iam-assumable-role-with-oidc

terraform-aws-modules/s3-bucket/aws 1.9.0 for s3_bucket_for_logs

- s3_bucket_for_logs in .terraform/modules/s3_bucket_for_logs/terraform-aws-s3-bucket-1.9.0

terraform-aws-modules/vpc/aws 2.44.0 for vpc

- vpc in .terraform/modules/vpc/terraform-aws-vpc-2.44.0

provider plugins

- plugin for provider “kubernetes” (hashicorp/kubernetes) 1.11.3

- plugin for provider “null” (hashicorp/null) 2.1.2

- plugin for provider “template” (hashicorp/template) 2.1.2

- plugin for provider “local” (hashicorp/local) 1.4.0

- plugin for provider “random” (hashicorp/random) 2.3.0

- plugin for provider “aws” (hashicorp/aws) 2.70.0

- plugin for provider “helm” (hashicorp/helm) 1.2.3

EKS and/or AWS bastion user-data with Terraform - RHEL 7.7 and CentOS 7.7 in all regions with disk and with tools

- Download and Install Terraform

- Create a new key pair via the EC2 console for your account and region (us-east-2 by default) and use the corresponding Key pair name value from the console as the key_name value in variable.tf when performing terraform plan -out "run.plan". Please keep your private pem file handy and note its path. One can also create a separate certificate from the private key, to be used with the ELB secure port, as follows:

openssl req -new -x509 -key privkey.pem -out certname.pem -days 3650

- Collect your AWS_ACCESS_KEY_ID="<< >>" and AWS_SECRET_ACCESS_KEY="<< >>" values. You can generate new ones from the IAM section of the AWS console via the URL for your <<account_user>>:

https://console.aws.amazon.com/iam/home?region=us-east-2#/users/<<account_user>>?section=security_credentials

- The ingress rule allows access from all sources, which also lets terraform remote-exec connect over SSH (agentless) from the local project to the target server. The rule can be checked in the EC2 console for the region: us-east-1, or any other region that you explicitly pass as a parameter. Please make sure the private key has been created, or the public key imported, as a key pair for the passed region. Then run:

git clone https://github.com/dwaiba/aws-terraform && cd aws-terraform && terraform init && terraform plan -out "run.plan" && terraform apply "run.plan"
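The openssl command mentioned in the key pair step prompts for the certificate subject interactively. A minimal sketch of a non-interactive variant follows, assuming a common name is enough for the ELB certificate; the helper name make_elb_cert and the example common name are hypothetical and not part of this repo.

```shell
# Hypothetical helper: create a self-signed certificate from an existing
# private key without interactive prompts.
# $1 = private key path, $2 = output certificate path, $3 = common name
make_elb_cert() {
  # -subj supplies the subject on the command line, so openssl does not prompt.
  openssl req -new -x509 -key "$1" -out "$2" -days 3650 -subj "/CN=$3"
}

# Example call (paths and name are placeholders):
#   make_elb_cert ~/Downloads/testdwai.pem ~/Downloads/testdwaicert.pem example.test
```

The resulting file is what the plan command expects as the elbcertpath value.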

- Automatic post-provisioning

curl http://169.254.169.254/latest/user-data | sudo sh

Run via terraform remote-exec, this executes the contents of the prep-centos7.txt shell-script file of this repo, which is made available as user-data, post provisioning. Types other than shell-script, including direct cloud-init commands, may also be passed as multipart user-data via terraform remote-exec.

- To destroy

terraform destroy
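The post-provisioning step pipes user-data straight into a shell. As a more cautious sketch, the script can be saved to a temporary file and run only when it looks like a shell script. The function names fetch_user_data and run_user_data are hypothetical; the sketch assumes the metadata endpoint answers plain requests (instances that enforce IMDSv2 need a session token first), and on a real instance the wrapper would be run with sudo, as in the original one-liner.

```shell
# Fetch the user-data document from the EC2 instance metadata endpoint.
fetch_user_data() {
  curl -fsS http://169.254.169.254/latest/user-data
}

# Hypothetical wrapper: save user-data to a temporary file, run it only if
# it starts with a shebang line, and clean up afterwards.
run_user_data() {
  tmp=$(mktemp) || return 1
  if ! fetch_user_data > "$tmp"; then
    rm -f "$tmp"
    return 1
  fi
  if head -n 1 "$tmp" | grep -q '^#!'; then
    sh "$tmp"
    status=$?
  else
    echo "user-data does not look like a shell script" >&2
    status=1
  fi
  rm -f "$tmp"
  return "$status"
}
```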

AWS RHEL 7.7 AMIs per region, as per Red Hat Solution #15356:

aws ec2 describe-images --owners 309956199498 --query 'Images[*].[CreationDate,Name,ImageId,OwnerId]' --filters "Name=name,Values=RHEL-7.7?*GA*" --region <<region-name>> --output table | sort -r

AWS CentOS 7.7 AMIs per region, as per:

aws ec2 describe-images --query 'Images[*].[CreationDate,Name,ImageId,OwnerId]' --filters "Name=name,Values=CentOS*7.7*x86_64*" --region <<region-name>> --output table | sort -r

The CentOS AMIs per region used in the map follow the list maintained on the CentOS Wiki.
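When only the newest image is needed, the same query can be narrowed to a single AMI ID. This is a sketch, assuming the name filter shown above; the helper name latest_centos77_ami is hypothetical. Because the filter matches on name only, it can return images from any publisher, so it is worth checking the OwnerId before using the result.

```shell
# Hypothetical helper: print the ID of the newest CentOS 7.7 x86_64 AMI
# in a region. $1 = region name, for example us-east-1.
latest_centos77_ami() {
  # sort_by orders the images by creation date; [-1] picks the newest one.
  aws ec2 describe-images \
    --filters "Name=name,Values=CentOS*7.7*x86_64*" \
    --query 'sort_by(Images, &CreationDate)[-1].ImageId' \
    --region "$1" \
    --output text
}

# Example call:
#   latest_centos77_ami us-east-1
```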

Login

Follow the output instructions shown for each DNS output. Use ec2-user or centos as the login user, as per the distro.

chmod 400 <<your private pem file>>.pem && ssh -i <<your private pem file>>.pem <<ec2-user or centos>>@<<public address>>
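The choice of login user can be scripted. The sketch below assumes that distro values starting with centos (such as centos7 in the plan command) mean CentOS and that anything else is a RHEL image using ec2-user; the helper name ssh_user_for_distro is hypothetical.

```shell
# Hypothetical helper: map the distro value passed to Terraform onto the
# default SSH login user. Assumption: any non-CentOS value is RHEL.
ssh_user_for_distro() {
  case "$1" in
    centos*) echo "centos" ;;
    *)       echo "ec2-user" ;;
  esac
}

# Example call (key path and address are placeholders):
#   ssh -i yourkey.pem "$(ssh_user_for_distro centos7)@public-address"
```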

:high_brightness: Automatic Provisioning

https://github.com/dwaiba/aws-terraform

:beginner: Pre-req:

- The private pem file for the region is available locally, with chmod 400 applied.

- The AWS Access key ID and Secret Access key are available for the AWS account.

You can generate new ones from the IAM section of the AWS console via the URL for your <<account_user>>:

https://console.aws.amazon.com/iam/home?region=us-east-2#/users/<<account_user>>?section=security_credentials
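The prerequisites can be checked up front with a small preflight sketch. The helper name require_tools is hypothetical, and the tool list in the example is only an assumption based on the commands used in this README.

```shell
# Hypothetical preflight helper: report every named tool that is not on
# PATH and return non-zero if at least one is missing.
require_tools() {
  missing=0
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing required tool: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example call:
#   require_tools terraform aws kubectl jq || exit 1
```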

:beginner: Plan:

terraform init && terraform plan -var aws_access_key=<<ACCESS KEY>> -var aws_secret_key=<<SECRET KEY>> -var count_vms=0 -var disk_sizegb=30 -var distro=centos7 -var key_name=testdwai -var elbcertpath=~/Downloads/testdwaicert.pem -var private_key_path=~/Downloads/testdwai.pem -var region=us-east-1 -var tag_prefix=k8snodes -out "run.plan"
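Passing secrets with -var leaves them in shell history and process listings. As a hedged alternative, Terraform also reads input variables from environment variables named TF_VAR_<name>. The sketch below assumes the variable names aws_access_key and aws_secret_key used in the plan command; the helper name export_tf_credentials is hypothetical.

```shell
# Hypothetical helper (not part of this repo): map the standard AWS
# credential variables onto the Terraform input variables used above.
export_tf_credentials() {
  if [ -z "${AWS_ACCESS_KEY_ID:-}" ] || [ -z "${AWS_SECRET_ACCESS_KEY:-}" ]; then
    echo "AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY must be set" >&2
    return 1
  fi
  # Terraform treats TF_VAR_<name> as the value of input variable <name>.
  export TF_VAR_aws_access_key="$AWS_ACCESS_KEY_ID"
  export TF_VAR_aws_secret_key="$AWS_SECRET_ACCESS_KEY"
}
```

After calling export_tf_credentials, the two -var aws_access_key and -var aws_secret_key arguments can be dropped from the plan and destroy commands.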

:beginner: Apply:

terraform apply "run.plan"

:beginner: Destroy:

terraform destroy -var aws_access_key=<<ACCESS KEY>> -var aws_secret_key=<<SECRET KEY>> -var count_vms=0 -var disk_sizegb=30 -var distro=centos7 -var key_name=testdwai -var elbcertpath=~/Downloads/testdwaicert.pem -var private_key_path=~/Downloads/testdwai.pem -var region=us-east-1 -var tag_prefix=k8snodes --auto-approve

Create a HA k8s Cluster as IAAS

- One can create a Fully HA k8s Cluster using k3sup

curl -sLSf https://get.k3sup.dev | sh && sudo install k3sup /usr/local/bin/

One can now use k3sup.

- Obtain the public IPs of the running instances with aws ec2 describe-instances, or obtain just the public IPs with:

aws ec2 describe-instances --query "Reservations[*].Instances[*].PublicIpAddress" --output=text

- One can create a cluster with the first IP as master: <pre>

k3sup install --cluster --ip <<Any of the Public IPs>> --user <<ec2-user or centos as per distro>> --ssh-key <<the location of the aws private key like ~/aws-terraform/yourpemkey.pem>></pre>

- One can also join another IP as master or node. For master: <pre>

k3sup join --server --ip <<Any of the other Public IPs>> --user <<ec2-user or centos as per distro>> --ssh-key <<the location of the aws private key like ~/aws-terraform/yourpemkey.pem>> --server-ip <<The Server Public IP>></pre>

or as a simple script:

export SERVER_IP=$(terraform output -json instance_ips|jq -r '.[]'|head -n 1)

k3sup install --cluster --ip $SERVER_IP --user ec2-user --ssh-key 'Your Private SSH Key Location' --k3s-extra-args '--no-deploy traefik --docker'

terraform output -json instance_ips | jq -r '.[]' | tail -n+2 | xargs -I {} k3sup join --server-ip $SERVER_IP --ip {} --user ec2-user --ssh-key 'Your Private SSH Key Location' --k3s-extra-args '--docker'

export KUBECONFIG=`pwd`/kubeconfig

kubectl get nodes -o wide -w
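Before touching the instances, it can help to preview which k3sup commands would run for a given list of IPs. The dry-run sketch below only prints commands and executes nothing. The helper name print_k3sup_plan is hypothetical, and it joins every later IP as an additional server, matching the "For master" form above; dropping --server from the join line would join them as agents, as the script above does.

```shell
# Dry-run sketch: read one IP per line on stdin and print the k3sup
# commands that would be run. Nothing is executed.
# $1 = SSH user (ec2-user or centos), $2 = path to the private key
print_k3sup_plan() {
  user="$1"
  key="$2"
  server_ip=""
  while IFS= read -r ip; do
    [ -n "$ip" ] || continue
    if [ -z "$server_ip" ]; then
      # The first IP becomes the initial server of the cluster.
      server_ip="$ip"
      echo "k3sup install --cluster --ip $ip --user $user --ssh-key $key"
    else
      echo "k3sup join --server --ip $ip --server-ip $server_ip --user $user --ssh-key $key"
    fi
  done
}

# Example call:
#   terraform output -json instance_ips | jq -r '.[]' | print_k3sup_plan ec2-user ~/aws-terraform/yourpemkey.pem
```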

- One can create a Fully HA k8s Cluster using kubeadm

kubeadm init

One can now use weavenet and join other workers:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl apply -f "https://cloud.weave.works/k8s/net?k8s-version=$(kubectl version | base64 | tr -d '\n')"
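The kubeadm steps above stop short of joining the workers. One common way to obtain the join command is sketched below, assuming it is run as root on the control-plane node after kubeadm init; the wrapper name print_worker_join_command is hypothetical.

```shell
# Run on the control-plane node, as root, after `kubeadm init`.
# Prints a ready-to-use `kubeadm join ...` command for worker nodes.
print_worker_join_command() {
  kubeadm token create --print-join-command
}
```

The printed command is then run with sudo on each worker node.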

Reporting bugs

Please report bugs by opening an issue in the GitHub Issue Tracker. An issue template is defined for bug reports; please review it before filing.

Patches and pull requests

Patches can be submitted as GitHub pull requests. If using GitHub please make sure your branch applies to the current master as a ‘fast forward’ merge (i.e. without creating a merge commit). Use the git rebase command to update your branch to the current master if necessary.

License

- Please see the LICENSE file for licensing information.

Code of Conduct

- Please see the Code of Conduct